Neural networks offer a powerful tool for scientific research in the era of massive data. However, because they are largely black boxes, explaining how they work and optimizing their computational efficiency can be difficult. Providing rigorous mathematical support for the approximation ability, or expressive power, of neural networks has therefore long been a key challenge for researchers. As a theoretical foundation of deep learning, the universal approximation theorem for multilayer perceptrons was the first result to describe the ability of neural networks to approximate arbitrary functions. In recent years, many types of neural networks have been proposed and developed. Among them, the reservoir computing model, a special variant of the recurrent neural network, has attracted significant attention because it resembles real biological brains while offering low computational cost and easy physical implementation. This architecture is particularly good at reconstructing and predicting dynamical systems that evolve over time. Notably, the parameters of both the input layer and the reservoir layer are assigned randomly and never trained; the only training cost is a least-squares fit of the output-layer parameters.
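The training scheme described above can be illustrated with a minimal sketch. It is not the code used in the study: the reservoir size, the weight ranges, the spectral radius of 0.9, the small ridge term, and the sine-wave test signal are all assumptions chosen only to keep the example short, and the function names `run_reservoir` and `fit_readout` are hypothetical.

```python
import numpy as np

def run_reservoir(inputs, w_in, w_res):
    """Drive a fixed random reservoir with a 1-D series and record its states."""
    state = np.zeros(w_res.shape[0])
    states = np.empty((len(inputs), w_res.shape[0]))
    for t, u in enumerate(inputs):
        # State update: the random weights are used as-is and never trained.
        state = np.tanh(w_in * u + w_res @ state)
        states[t] = state
    return states

def fit_readout(states, targets, ridge=1e-6):
    """Fit only the output weights by (lightly regularised) least squares."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)

rng = np.random.default_rng(0)
n_neurons = 50  # assumed size, for illustration only
w_in = rng.uniform(-0.5, 0.5, size=n_neurons)
w_res = rng.uniform(-1.0, 1.0, size=(n_neurons, n_neurons))
# Rescale so the largest eigenvalue magnitude (spectral radius) is 0.9.
w_res *= 0.9 / np.max(np.abs(np.linalg.eigvals(w_res)))

series = np.sin(0.1 * np.arange(1200))  # toy signal, an assumption
states = run_reservoir(series[:-1], w_in, w_res)
targets = series[1:]  # one-step-ahead prediction target

train = slice(100, 800)  # skip the first 100 steps as washout
test = slice(800, None)
w_out = fit_readout(states[train], targets[train])
prediction = states[test] @ w_out
test_rmse = np.sqrt(np.mean((prediction - targets[test]) ** 2))
print(f"one-step test RMSE: {test_rmse:.2e}")
```

The only learned quantity here is `w_out`; `w_in` and `w_res` stay at their random initial values, which is what keeps the training cost low.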

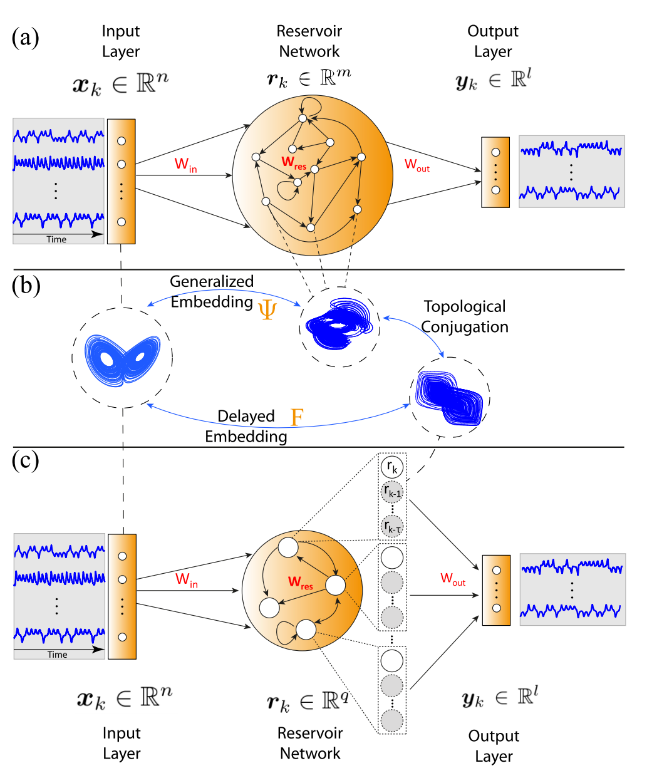

In research published online in May 2023 in Physical Review Research (Volume 5, Article No. L022041), Professor Lin Wei's team from the Research Institute of Intelligent Complex Systems at Fudan University and Professor Ma Huanfei's team from the School of Mathematical Sciences at Soochow University give a rigorous theoretical characterization of how well the reservoir computing model can approximate real dynamical systems. The study treats the state of each neuron in the reservoir as an observation of the real-world evolution from a different perspective, and on that basis shows that the reservoir state-update equation acts as an embedding of the external dynamics into the internal states. This theory strengthens the theoretical analysis of the reservoir computing architecture. Moreover, using the observational equivalence between time delays and spatial distribution provided by Takens' embedding theorem, the authors propose a novel reservoir computing architecture in which temporal information can be converted into spatial information and vice versa. By using information from past time steps instead of increasing the number of neurons, the architecture becomes significantly lighter. Interestingly, under certain circumstances, good reconstruction and prediction performance can be achieved with just one neuron (together with its past states).

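Figure: A lightweight reservoir neural network architecture based on the interconversion of temporal and spatial information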
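The idea of trading neurons for time delays can be sketched in a toy setting. The example below is only an illustration under stated assumptions, not the architecture from the paper: it uses a single tanh neuron with hand-picked weights `a` and `b`, six delayed copies of that neuron's state spaced five steps apart, a sine-wave signal, and hypothetical helper names. It compares a readout that sees only the current state with one that also sees the delayed states.

```python
import numpy as np

def single_neuron_states(inputs, a=0.5, b=0.5):
    """State history of a one-neuron reservoir: r <- tanh(a * r + b * u)."""
    r = 0.0
    history = np.empty(len(inputs))
    for t, u in enumerate(inputs):
        r = np.tanh(a * r + b * u)
        history[t] = r
    return history

def delay_features(r, n_delays, stride):
    """Columns r(t), r(t - stride), ..., r(t - (n_delays - 1) * stride)."""
    start = (n_delays - 1) * stride  # first time index with a full history
    cols = [r[start - k * stride: len(r) - k * stride] for k in range(n_delays)]
    return np.column_stack(cols), start

def readout_rmse(features, targets, n_train):
    """Least-squares readout on the first n_train rows, RMSE on the rest."""
    w, *_ = np.linalg.lstsq(features[:n_train], targets[:n_train], rcond=None)
    err = features[n_train:] @ w - targets[n_train:]
    return np.sqrt(np.mean(err ** 2))

series = np.sin(0.1 * np.arange(1200))  # toy signal, an assumption
r = single_neuron_states(series[:-1])
targets = series[1:]  # one-step-ahead prediction target

feats_delay, start = delay_features(r, n_delays=6, stride=5)
feats_plain = r[start:, None]  # the same neuron without delays
y = targets[start:]

washout = 100  # discard the initial transient
rmse_plain = readout_rmse(feats_plain[washout:], y[washout:], n_train=700)
rmse_delay = readout_rmse(feats_delay[washout:], y[washout:], n_train=700)
print(f"no delays: {rmse_plain:.2e}   with delays: {rmse_delay:.2e}")
```

In this sketch the delayed states play the role that extra neurons would otherwise play: the readout gains more observation coordinates without the reservoir itself growing.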

The proposed architecture has been validated empirically on multiple models and datasets. It offers an effective way, grounded in rigorous mathematical theory, to reduce the computational overhead of neural networks, and it makes their practical implementation easier. Lin Wei, Ma Huanfei, Leng Siyang, and other team members have spent years developing applied mathematics and complex systems theory, with the aim of addressing practical challenges in the interdisciplinary fields of artificial intelligence and data science. Professor Ma Huanfei of Soochow University and Professor Lin Wei of Fudan University are the co-corresponding authors of the paper. The research was funded and supported by the National Natural Science Foundation of China, the Shanghai Artificial Intelligence Laboratory, and the Shanghai Municipal Science and Technology Major Project.